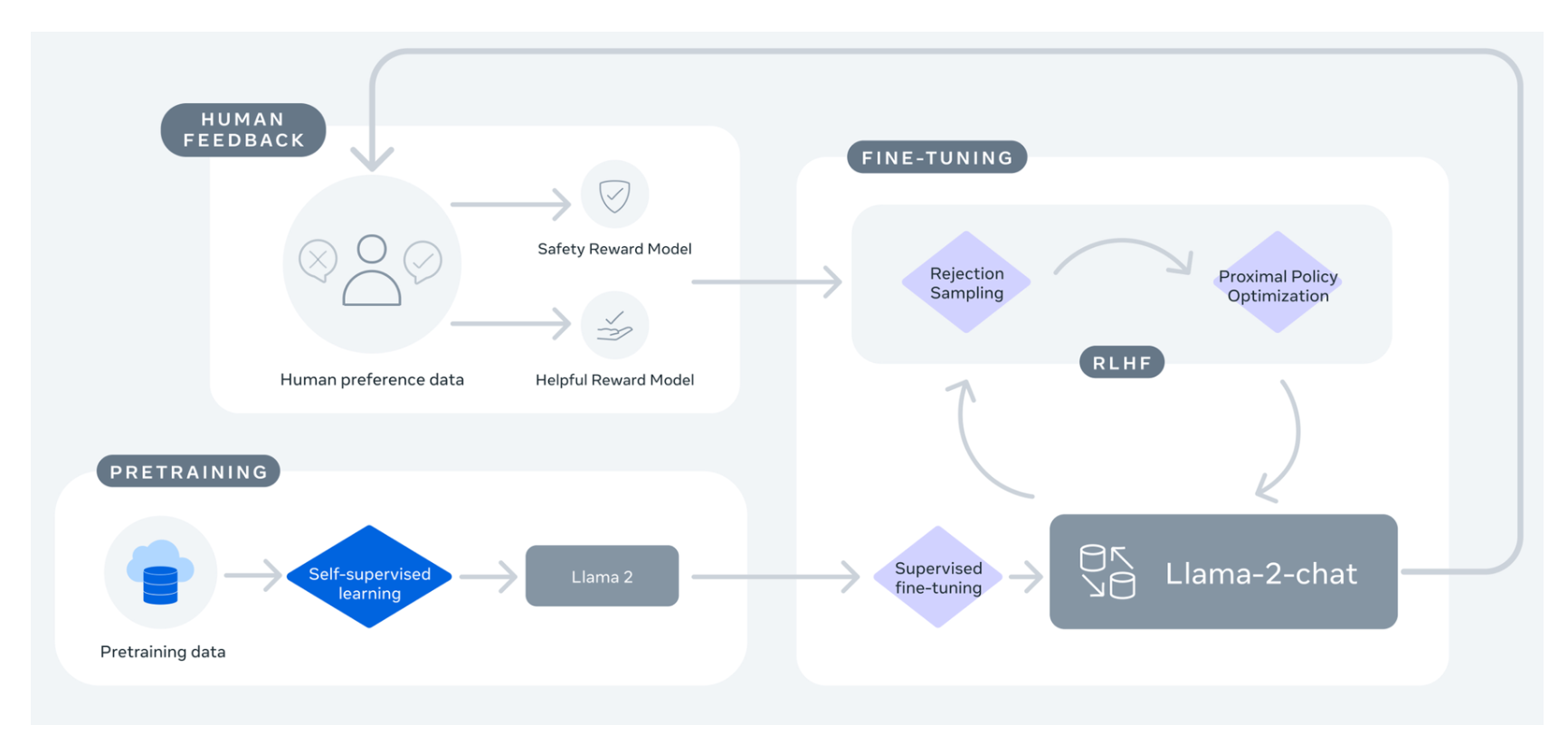

Fine-tune LLaMA 2 (7B-70B) on Amazon SageMaker: a complete guide from setup to QLoRA fine-tuning and deployment on Amazon SageMaker. Deploy Llama 2 (7B/13B/70B) on Amazon SageMaker: a ...

How to train with TRL: As mentioned, the RLHF pipeline typically consists of these distinct parts: a supervised fine-tuning (SFT) step, the process of annotating data with ...

The tutorial provided a comprehensive guide on fine-tuning the LLaMA 2 model using techniques like QLoRA, PEFT, and SFT to overcome memory and compute limitations.

In this blog post, we will look at how to fine-tune Llama 2 70B using PyTorch FSDP and related best practices. We will be leveraging Hugging Face Transformers, Accelerate, and ...

In this section, we look at the tools available in the Hugging Face ecosystem to efficiently train Llama 2 on simple hardware and show how to fine-tune the 7B version of Llama 2 on a ...
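To make the "memory and compute limitations" mentioned above concrete, here is a rough back-of-the-envelope sketch of why 4-bit quantization (the "Q" in QLoRA) matters. It is not taken from any of the guides quoted here. It assumes nominal parameter counts of 7, 13, and 70 billion, and it counts model weights only: activations, optimizer state, LoRA adapter weights, and quantization metadata are all ignored, so real usage will be higher. The helper name `weight_memory_gb` is hypothetical.

```python
# Rough estimate of memory needed just to hold model weights.
# Assumptions (not from the source): nominal parameter counts,
# 1 GB = 10^9 bytes, and no overhead of any kind.

def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Return the weight storage in GB for a given precision."""
    return num_params * bits_per_param / 8 / 1e9


# Nominal sizes of the Llama 2 family (assumed round numbers).
SIZES = {"7B": 7e9, "13B": 13e9, "70B": 70e9}


def summarize() -> dict:
    """Map each model size to (16-bit GB, 4-bit GB) for weights only."""
    return {
        name: (weight_memory_gb(n, 16), weight_memory_gb(n, 4))
        for name, n in SIZES.items()
    }


if __name__ == "__main__":
    for name, (half, four_bit) in summarize().items():
        print(f"Llama 2 {name}: ~{half:.1f} GB at 16-bit, ~{four_bit:.1f} GB at 4-bit")
```

Under these assumptions, storing the weights in 4 bits takes a quarter of the space of 16-bit weights, which is the main reason the 7B model can fit on a single modest GPU for fine-tuning.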

